Three Development Themes

Consultation

Audio Analysis Deck

THREE DEVELOPMENT THEMES

I’ve mapped out my three development proposals according to the three themes I aim to explore in my dissertation discussion: algorithmic, scientific and psychological. My approaches and methods for building each prototype therefore relate back to its theme, through data collection, user interaction, prototype material, functionality and so on.

Theme:

Psychological

Starting with the psychology-driven proposal, I want to continue my 3D data object experiments, which use textures and shapes to represent our emotions and tone of voice in the online space. This time, however, I will focus less on the physical sense of touch. I intend to make my prototypes more digital so that the outputs of the three themes stay consistent, while still retaining some elements of physicality, whether in the interaction touchpoints or during data collection.

To develop this idea, I researched how our online behaviour is influenced, to better understand the factors that shape what we do and how we think in the online environment. From there, I considered how online behaviour is linked to how we present ourselves online. This led me to think about how people create or adapt different ways of texting so they can express themselves more accurately and convey their emotions and tone of voice. I then started researching the way people type and how this relates to psychological behaviour. I found many interesting articles and insights into how the simple act of typing on a keyboard can reflect our identities in ways we never thought possible: the choice of keys, the rhythm of our keystrokes and the use of special characters such as emoticons or emojis.

To carry out this prototype, I intend to create a messaging bot or a forum that simulates how people interact and converse with each other. By gathering data from the user’s responses, I can translate their keystrokes into findings about their typing rhythm and patterns. These findings can then be mapped into visuals that mirror the user's unique way of communicating through text.

I started by looking for tutorials on how to create a keylogger, but none of them worked for me because I had trouble converting the data into .txt files.
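Converting the logged data into a .txt file was the sticking point, so that saving step can be sketched on its own. This is a minimal sketch in Python. It assumes the keystrokes have already been captured elsewhere, for example by keydown/keyup listeners on the forum page, as (key, press time, release time) records in milliseconds. The function names and the comma-separated column layout are hypothetical choices and are not taken from any tutorial.

```python
import csv
from typing import Iterable, List, Tuple

# One logged keystroke: (key, press time in ms, release time in ms).
# This record layout is a hypothetical choice for illustration.
KeyEvent = Tuple[str, float, float]


def save_key_log(events: Iterable[KeyEvent], path: str) -> None:
    """Write keystroke events to a plain-text, comma-separated file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["key", "press_ms", "release_ms"])  # header row
        for key, press_ms, release_ms in events:
            # csv quotes awkward keys such as "," automatically
            writer.writerow([key, press_ms, release_ms])


def load_key_log(path: str) -> List[KeyEvent]:
    """Read the file back into (key, press_ms, release_ms) tuples."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        return [(row["key"], float(row["press_ms"]), float(row["release_ms"]))
                for row in reader]


if __name__ == "__main__":
    sample = [("h", 0.0, 95.0), ("i", 180.0, 260.0), (",", 420.0, 500.0)]
    save_key_log(sample, "keylog.txt")
    print(load_key_log("keylog.txt"))
```

The file is still plain text that opens in any editor. Using the `csv` module rather than joining strings by hand means keys like the comma or space survive the round trip.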

Next, I looked into existing keystroke tools that already record what we type. The one I found isn’t technically designed to track typing, but its basic mechanic of displaying the keys that had been pressed was a good enough starting point. I used the browser’s ‘Inspect’ tool to find out how the site was programmed to do this.

Now that I had the key-press data on the site, I was still missing a function to record the time intervals, i.e. the pauses between keys. I searched for tutorials on measuring the intervals between keys but found none, so I turned to forums. A couple of the solutions I found didn’t work when I tried them myself. The only one I could understand and get working took several hours of trying :). The difficulty was that I had to adapt that code to fit my earlier sketch containing the key-press code, which made it hard to stitch the two together and make them work with one another.
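The missing interval function comes down to subtracting timestamps. This minimal sketch assumes each keystroke is logged in the order it was pressed, with its press (keydown) and release (keyup) times in milliseconds. Dwell time is how long a key is held. The pause is measured here from one key's release to the next key's press. That is one common definition of "flight time"; press-to-press is another, so treat the choice as an assumption. `Keystroke`, `dwell_times` and `flight_times` are hypothetical names.

```python
from typing import List, NamedTuple


class Keystroke(NamedTuple):
    key: str
    press_ms: float    # time the key went down
    release_ms: float  # time the key came back up


def dwell_times(strokes: List[Keystroke]) -> List[float]:
    """How long each key was held down."""
    return [s.release_ms - s.press_ms for s in strokes]


def flight_times(strokes: List[Keystroke]) -> List[float]:
    """Pause between releasing one key and pressing the next.

    Assumes strokes are in press order. Negative values mean the next
    key went down before the previous one was released (overlapping
    keystrokes, common in fast typing).
    """
    return [nxt.press_ms - cur.release_ms
            for cur, nxt in zip(strokes, strokes[1:])]


if __name__ == "__main__":
    typed = [Keystroke("h", 0, 90), Keystroke("e", 150, 230),
             Keystroke("y", 600, 700)]
    print(dwell_times(typed))   # [90, 80, 100]
    print(flight_times(typed))  # [60, 370]
```

Keeping dwell and flight times as separate lists makes it easier to map them to different visual properties later.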

Theme:

Scientific

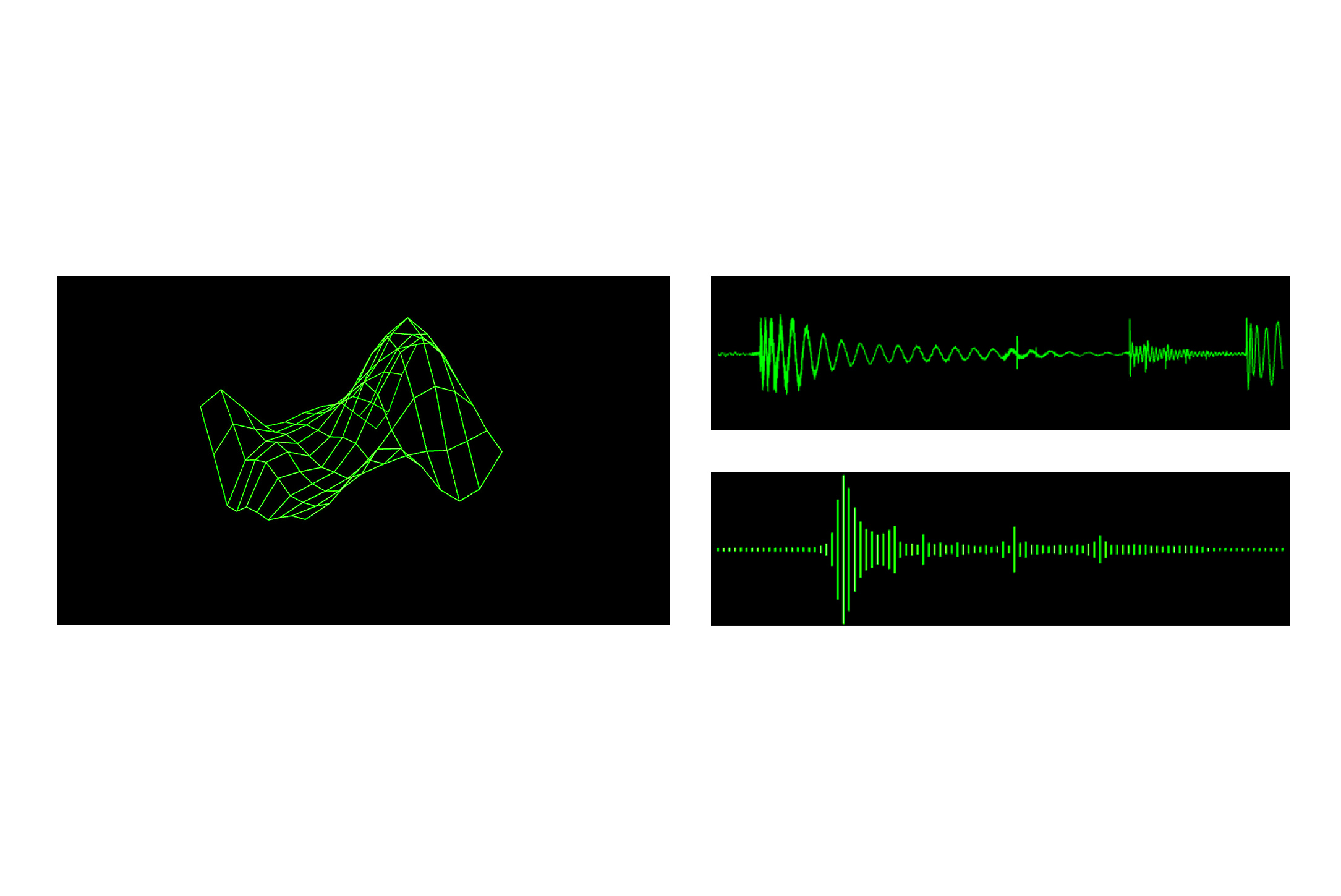

The voice-as-personality research falls under the scientific theme because of its scientific approach to analysing voices for personality traits. The idea has not changed much. Data is still collected through users recording their voices, and the analysis is conducted on their vocal traits to determine the user’s personality. The analysis will be presented through audio waves and sound graphs, and the patterns generated from these graphs will be used to decipher personality traits. For instance, several readings I’ve found recently state that the pitch of our voices can reveal characteristics of extroversion and introversion. I therefore think the insights from the audio waves and sound graphs can be used to map visuals of user personalities. For this, I followed a TouchDesigner tutorial on building an audio analysis deck that analyses audio files through various data presentation formats. Something I still need to figure out by myself is how to detect real-time audio signals through the microphone, instead of using an embedded audio file, so that the deck can be used for user testing.
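Before pitch can be linked to traits like extroversion, it has to be measured from the recording. The sketch below estimates the fundamental frequency of a short frame of samples using autocorrelation, a standard pitch-detection technique. It is an assumption about one workable method, not what the TouchDesigner tutorial does, and the 50 to 500 Hz search range for speaking voices is an assumed bound. Python is used because it is also TouchDesigner's scripting language. Inside TouchDesigner, though, the live samples would come from the microphone via an Audio Device In CHOP rather than from a Python list.

```python
import math
from typing import Sequence


def estimate_pitch(samples: Sequence[float], sample_rate: int,
                   fmin: float = 50.0, fmax: float = 500.0) -> float:
    """Estimate the fundamental frequency (Hz) of a voiced frame.

    Plain autocorrelation: the lag at which the signal best matches a
    shifted copy of itself is taken as one pitch period. fmin and fmax
    bound the search to an assumed speaking-voice range.
    """
    n = len(samples)
    min_lag = max(1, int(sample_rate / fmax))        # shortest period searched
    max_lag = min(int(sample_rate / fmin), n - 1)    # longest period searched
    best_lag, best_score = 0, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    if best_lag == 0:
        raise ValueError("frame too short for the requested pitch range")
    return sample_rate / best_lag


if __name__ == "__main__":
    sr = 8000
    # Synthetic 200 Hz test tone standing in for a voice recording.
    tone = [math.sin(2 * math.pi * 200 * t / sr) for t in range(400)]
    print(estimate_pitch(tone, sr))  # 200.0
```

Real voices are noisier than a sine tone. A per-frame estimate like this would usually be averaged over many frames before being compared across users.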

The output for this prototype is still a little unclear. I want to use typography to visualise these personality traits, as I found that voice and typography are interconnected linguistic concepts with different forms of delivery: voice conveys meaning through sound, while typography conveys meaning through visuals. I therefore want to experiment with typography as a way of mapping vocal traits and generating personality identities. The current idea is for the vocal pitch and frequency to manipulate and distort the letterforms.
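One way to make "pitch distorts the letterform" concrete is to normalise each vocal measurement into a 0 to 1 range and map it onto a type parameter. In this hypothetical sketch, pitch drives the slant angle. Loudness, an extra input not named above, drives stroke weight on a 100 to 900 scale similar to a variable font's weight axis. The 80 to 300 Hz pitch range, the 20° maximum slant and the loudness ceiling are placeholder values to tune during testing, not findings from the readings.

```python
from dataclasses import dataclass


def normalise(value: float, low: float, high: float) -> float:
    """Scale value into 0..1 relative to [low, high], clamping outliers."""
    t = (value - low) / (high - low)
    return max(0.0, min(1.0, t))


@dataclass
class LetterformParams:
    slant_deg: float  # how far the letters lean
    weight: float     # stroke weight on a 100-900 scale


def voice_to_letterform(pitch_hz: float, rms_level: float) -> LetterformParams:
    # Hypothetical ranges: placeholders to be tuned during user testing.
    pitch_t = normalise(pitch_hz, 80.0, 300.0)
    loud_t = normalise(rms_level, 0.0, 0.5)
    return LetterformParams(
        slant_deg=pitch_t * 20.0,       # higher voice -> more slant
        weight=100.0 + loud_t * 800.0,  # louder voice -> heavier strokes
    )


if __name__ == "__main__":
    print(voice_to_letterform(190.0, 0.25))
```

Clamping keeps unusually high or low voices from producing unreadable letters. The linear mapping could later be swapped for a curve if small pitch differences need to read more strongly.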

Theme:

Algorithmic

This theme is the most relevant to my current research area because it deals with the computational side of research, and I think my current focus on generative visual systems and online identities fits into it. However, because of this relevance, I also found it difficult to decide which parts of my research to extract and draw attention to through the algorithmic theme. Is there something from my readings that I’d like to focus on specifically, such as a type of data, identity or output format? To answer this, I filtered my RPO into sections, which led me to two keywords: behavioural data and the quantified self. I think these are the two most algorithmic areas in terms of their research and case studies, so the prototype for this theme will focus on these two concepts.

The idea for this theme is still quite vague. When I think of behavioural data, it leads me to the digital footprint, which reminds me of my Term 1 Week 5 experiment on visualising our digital footprint through screen time data. However, I am hesitant to go down this route again, as I don’t want the prototype to be another data visualisation. My experiments showed that the sense of identification with a data viz was not strong, so this is something to keep in mind when working on the output for this prototype. I may need to focus on humanising the visualisation to avoid creating something too abstract or technical that feels out of touch with the user.

To carry out this prototype, my current idea is to collect the user’s screen time data and translate it into a generative visual system.

I have two ideas for how to collect the data. The first is a physical toolkit with which users stamp their digital footprint onto a card. The stamps will be carved into different shapes to represent different types of activities, and the colour of each stamp will represent how frequently the user takes part in that activity. In a way, this is a practice of manually tracking our digital footprint. The second is to use image recognition to process the user’s screen time data when they hold their mobile device’s screen up to the computer’s camera.
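The stamp encoding amounts to a lookup table: the activity type picks the shape, and a frequency band picks the ink colour. Here is a minimal sketch of that rule set. The activity categories, shapes, colours and hour thresholds are all hypothetical placeholders, and daily hours is an assumed measure of frequency.

```python
from typing import Dict, List, Tuple

# Hypothetical stamp set: one carved shape per activity type.
SHAPES: Dict[str, str] = {
    "social media": "circle",
    "messaging": "triangle",
    "video": "square",
    "games": "star",
}

# Hypothetical ink colours from least to most frequent, each paired with
# the exclusive upper bound (hours per day) of its frequency band.
COLOUR_BANDS: List[Tuple[float, str]] = [
    (0.5, "pale yellow"),
    (1.0, "orange"),
    (2.0, "red"),
    (float("inf"), "deep purple"),
]


def stamp_for(activity: str, hours_per_day: float) -> Tuple[str, str]:
    """Return the (shape, colour) a user would stamp for one activity."""
    shape = SHAPES[activity]
    for upper, colour in COLOUR_BANDS:
        if hours_per_day < upper:
            return shape, colour
    raise ValueError("hours_per_day must be a finite number")


if __name__ == "__main__":
    print(stamp_for("video", 1.5))
```

Writing the bands down like this also gives participants a printed key to follow, which keeps manual stamping consistent between users.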

For the visual mapping, I am thinking of something systematic that shows the characteristics of algorithmic behaviour. One example is a grid-like artefact in which the most frequent categories of activity appear as ‘inflated’ cells, with the size of each cell reflecting how often that activity occurs. Undistorted cells represent activities that are dormant.
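The "inflated grid" rule could be expressed as a scale factor per cell. This minimal sketch assumes screen time arrives as minutes per activity category, and that the busiest category is inflated to a hypothetical maximum of twice its normal size. Scaling relative to the busiest category, rather than to a fixed number of minutes, is a design choice that keeps every user's grid within the same visual range.

```python
from typing import Dict


def cell_scales(minutes: Dict[str, float],
                max_scale: float = 2.0) -> Dict[str, float]:
    """Map each activity's screen time to a grid-cell scale factor.

    Dormant activities (0 minutes) keep scale 1.0, i.e. an undistorted
    cell. The most-used activity is inflated to max_scale (a hypothetical
    default), and everything else is scaled linearly in between.
    """
    busiest = max(minutes.values(), default=0.0)
    if busiest <= 0:
        return {name: 1.0 for name in minutes}
    return {name: 1.0 + (max_scale - 1.0) * (m / busiest)
            for name, m in minutes.items()}


if __name__ == "__main__":
    print(cell_scales({"social": 120, "video": 60, "games": 0}))
    # {'social': 2.0, 'video': 1.5, 'games': 1.0}
```

The scale factors could then drive cell sizes in a Processing sketch or be exported as outlines for cutting.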

CONSULTATION

Vikas's Comments from Cohort Sharing:

Metaphor

flowers

taxonomies

looks promising

Above are some of the comments by other lecturers during the cohort sharing

Dissertation-Sequence of research

–User research first

–What you know about the target audience

–What is valuable to them

–Prototyping-generative research

–User testing & user feedback

–At the end, if the research allows, revisit the prototype after user testing

Working on the development proposals made me question the overlap between the algorithmic and scientific themes, which I was able to get some clarification on:

–Algorithmic could focus on the data that is being recorded

–Data scientists, engineers, designers, tech companies

–Scientific looks at more complicated data, such as voice and movement

–Lab specific

–Academic based

Dissertation-Discussion

Ideation starting points:

–Initial experiments that shaped/solidified your idea or focused it on a particular aspect

–Insights from unsuccessful experiments are important for the same reason

–Experiments can add to the success by getting rid of clutter

Development Proposals

–For the keystroke simulation, consider using a message board or public forum

–Collecting what users type on their own computers might be an issue due to user privacy

–Record the keys pressed, the intervals, and the press and release times (time spent on each key); measure pauses

–For this to work, try using .js + html

–Analyse in code whether the typing is rhythmic, fast or slow

–For the algorithmic screen time data collection, consider letting users take a screenshot and send it over to the computer

–Output: create a heatmap in Processing

–Generate slices

–Cut them out by hand or lasercut
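The "rhythmic, fast or slow" analysis could start from two summary numbers. The first is the average interval between key presses, which gives speed. The second is how much the intervals vary relative to that average, i.e. the coefficient of variation, which gives regularity. This minimal sketch assumes press-to-press intervals in milliseconds. The 150 ms, 400 ms and 0.25 thresholds are hypothetical and would need calibrating against real participants' typing.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def describe_rhythm(intervals_ms: List[float],
                    fast_below: float = 150.0,
                    slow_above: float = 400.0,
                    steady_cv: float = 0.25) -> Tuple[str, str]:
    """Label typing speed and regularity from press-to-press intervals.

    All thresholds are hypothetical defaults to be tuned on real data.
    Regularity uses the coefficient of variation (std / mean): a low
    value means the pauses are similar in length, i.e. rhythmic typing.
    """
    if len(intervals_ms) < 2:
        raise ValueError("need at least two intervals")
    avg = mean(intervals_ms)
    if avg <= 0:
        raise ValueError("intervals must have a positive mean")
    if avg < fast_below:
        speed = "fast"
    elif avg > slow_above:
        speed = "slow"
    else:
        speed = "moderate"
    cv = pstdev(intervals_ms) / avg
    regularity = "rhythmic" if cv < steady_cv else "irregular"
    return speed, regularity


if __name__ == "__main__":
    print(describe_rhythm([100, 110, 90, 100]))  # ('fast', 'rhythmic')
```

The two labels are independent, so a slow typist can still be rhythmic. That distinction could become two separate visual properties.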

AUDIO ANALYSIS DECK

Update on TouchDesigner Progress:

I’m slowly getting better at TouchDesigner: compared to last week, I’m faster at using its functions and more familiar with the shortcut keys!

Last week’s experiment, which used TouchDesigner to gather audio data and translate it into visuals, made me curious about how else TouchDesigner could be used to collect, record and measure audio. While browsing other tutorials by the same YouTuber, Elekktronaut, I found one in which he shows how to embed audio files and convert them into data that can be read by audio analysers and turned into soundwaves and audio graphs.

However, this tutorial only shows how to insert a music track as an audio file in the analysis deck. It does not show how to take a user’s voice as real-time input, capture it and convert it into data that can be analysed. This is something I need to explore further by myself. Perhaps I could use what I learnt from the previous tutorial about recording audio through the computer’s microphone.
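For the real-time voice input, the simplest live "audio graph" is a loudness envelope: the root-mean-square (RMS) level of each short frame of samples. This standalone sketch assumes samples arrive as floats between -1 and 1. Inside TouchDesigner, the microphone signal would come from an Audio Device In CHOP in place of the audio file input. The 1024-sample frame size is an arbitrary choice.

```python
import math
from typing import List, Sequence


def rms_envelope(samples: Sequence[float],
                 frame_size: int = 1024) -> List[float]:
    """Root-mean-square level of each consecutive, non-overlapping frame.

    A trailing partial frame is included so no audio is dropped.
    """
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    levels = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        levels.append(math.sqrt(sum(x * x for x in frame) / len(frame)))
    return levels


if __name__ == "__main__":
    print(rms_envelope([0.5, -0.5, 0.5, -0.5, 0.0, 0.0], frame_size=2))
    # [0.5, 0.5, 0.0]
```

Plotting these levels over time gives the moving loudness line that an analysis deck typically shows next to the raw waveform.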

Besides the audio aspect, this tutorial also taught me how to use TouchDesigner to create an interface. Using containers to build grids and sections within a ‘page’ was a helpful and easy way to understand how to lay out an interface in TouchDesigner.