1 / Keystroke Data Collection Interface

2 / Prototype Photoshoot

KEYSTROKE DATA COLLECTION INTERFACE

In last week's consultation, I asked how to link my keystroke logger, which was built in HTML, to the affective data objects designed in Blender, so that the logged data could be analysed and used to generate the objects. I learnt that I should have built both in the same software, which would have made transferring files easier and kept the working interface smooth.

-

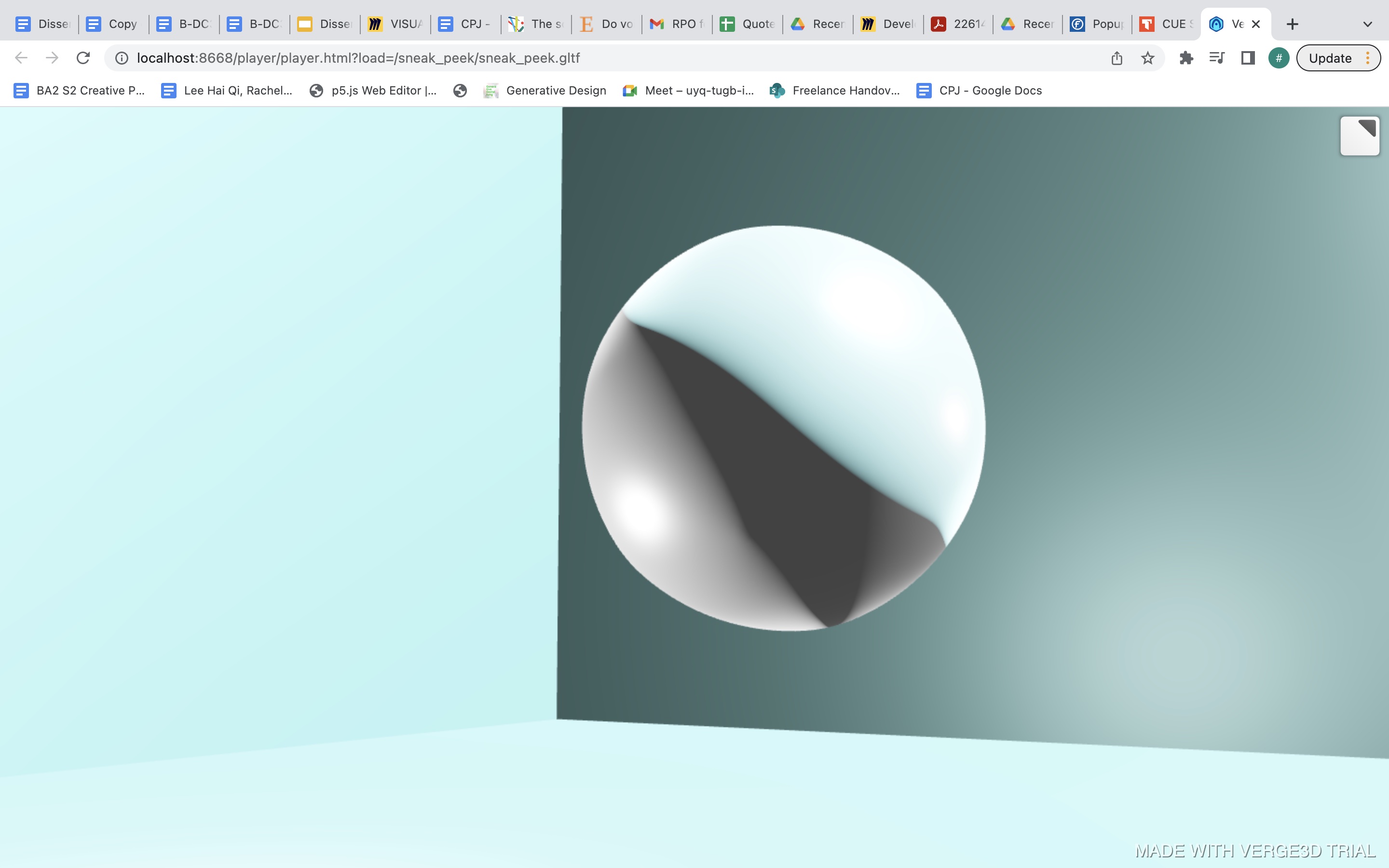

I looked for ways to make the object files interactive within Blender itself, which led me to discover and learn VERGE 3D, an add-on for Blender. The tutorial above shows how to create an interactive experience within the Blender 3D space. VERGE 3D inputs let us attach functions to objects; for instance, clicking on a 'button' changes the object.

-

However, despite following multiple tutorials, the VERGE 3D method did not work well because of the objects' animations. When the files were transferred onto the server, the objects became static instead of animated, a problem I have yet to solve.

Additionally, VERGE 3D's interactive functions were limited and could not be connected to the separate HTML page that held my keystroke logger. Therefore, I had to abandon this method and look for other approaches.

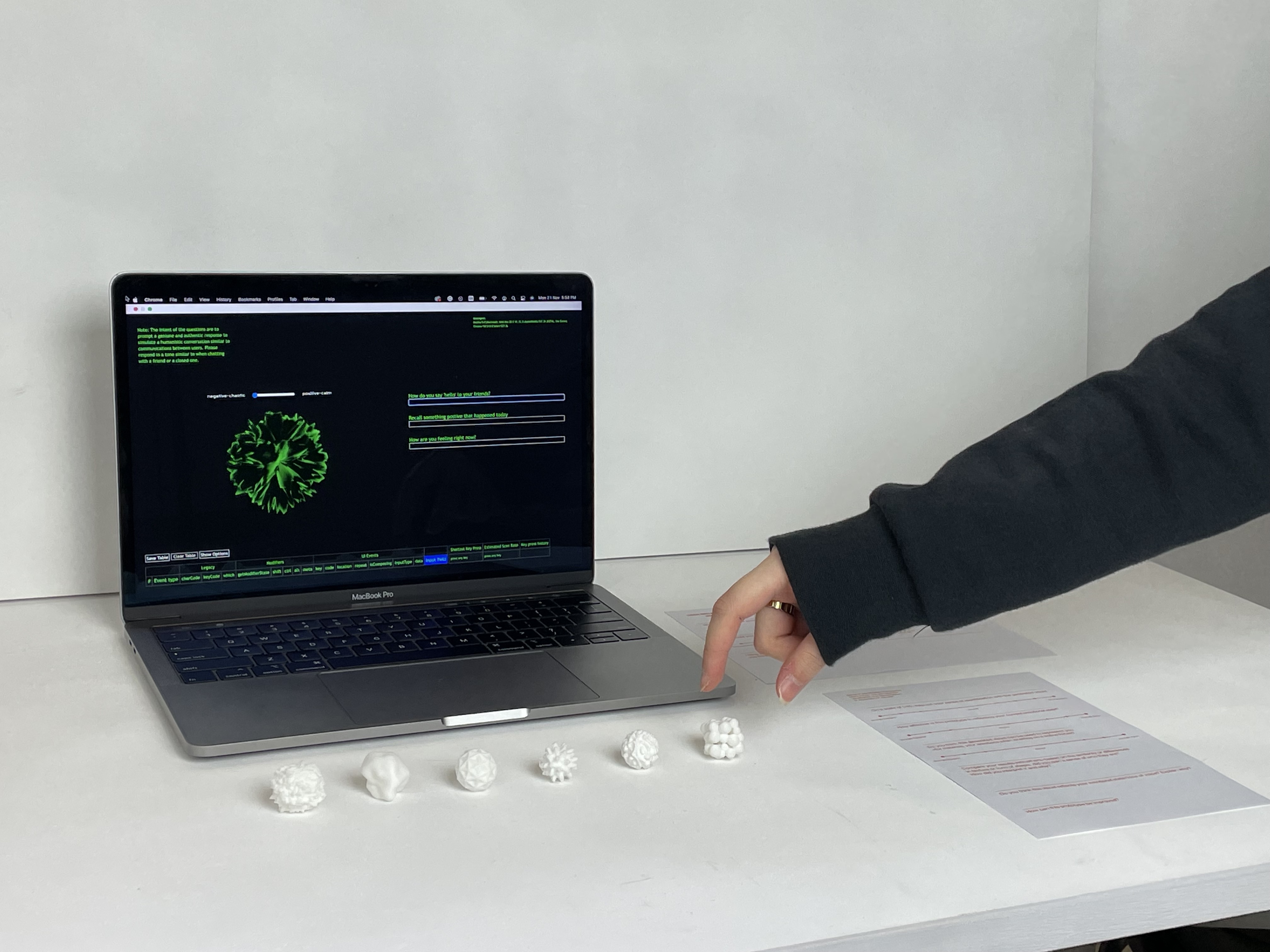

Finally, to make the interface complete and ready for user-testing, I decided to use HTML as the main interface instead of the 3D objects. By exporting my 3D designs as .obj files and importing them into p5.js, I was able to embed the sketch as an iframe in my keystroke logger interface, where users can interact with it. I mapped the objects onto a slider, so that when the slider reaches a certain position, a new object is generated.
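To illustrate the slider mapping described above, here is a minimal sketch in TypeScript (close to the JavaScript that p5.js uses). It assumes the slider runs from 0 to 100 and that its range is split into equal-width bands, one per data object. The filenames in `OBJECT_FILES` and the helpers `objectIndexForSlider` and `makeBandWatcher` are hypothetical, and the actual prototype may use different thresholds.

```typescript
// Hypothetical OBJ filenames exported from Blender; the real names are not given in the post.
const OBJECT_FILES: string[] = ["calm.obj", "anxious.obj", "excited.obj", "sad.obj"];

/**
 * Map a slider value in [min, max] to one of `count` equal-width bands and
 * return the index of the data object that band selects (assumed scheme).
 */
function objectIndexForSlider(value: number, min: number, max: number, count: number): number {
  if (count <= 0 || max <= min) {
    throw new Error("need at least one object and max > min");
  }
  // Clamp so out-of-range values still select the first or last object.
  const clamped = Math.min(Math.max(value, min), max);
  const t = (clamped - min) / (max - min); // normalised position, 0..1
  // floor(t * count) yields count bands; t === 1 would give `count`, so cap it.
  return Math.min(Math.floor(t * count), count - 1);
}

/**
 * Remember the last band so a new object is only "generated" when the
 * slider crosses into a different band (hypothetical helper).
 */
function makeBandWatcher(count: number, min = 0, max = 100) {
  let current = -1;
  return (value: number): number | null => {
    const next = objectIndexForSlider(value, min, max, count);
    if (next === current) return null; // same band: keep the current object
    current = next;
    return next;
  };
}
```

In a p5.js sketch, the value could come from a slider created with `createSlider(0, 100, 0)` and read with `slider.value()` inside `draw()`; when the watcher returns a new index, the matching model (loaded in `preload()` with `loadModel()`) would be drawn with `model()` in WEBGL mode. Equal-width bands are only one assumption; hand-picked thresholds per object would work the same way.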

Although the interface does not yet function as intended (ideally, the data objects would be generated as users respond and type), I think it still works in presenting the separate components together, simulating the keystroke analysis and letting users select their data objects.

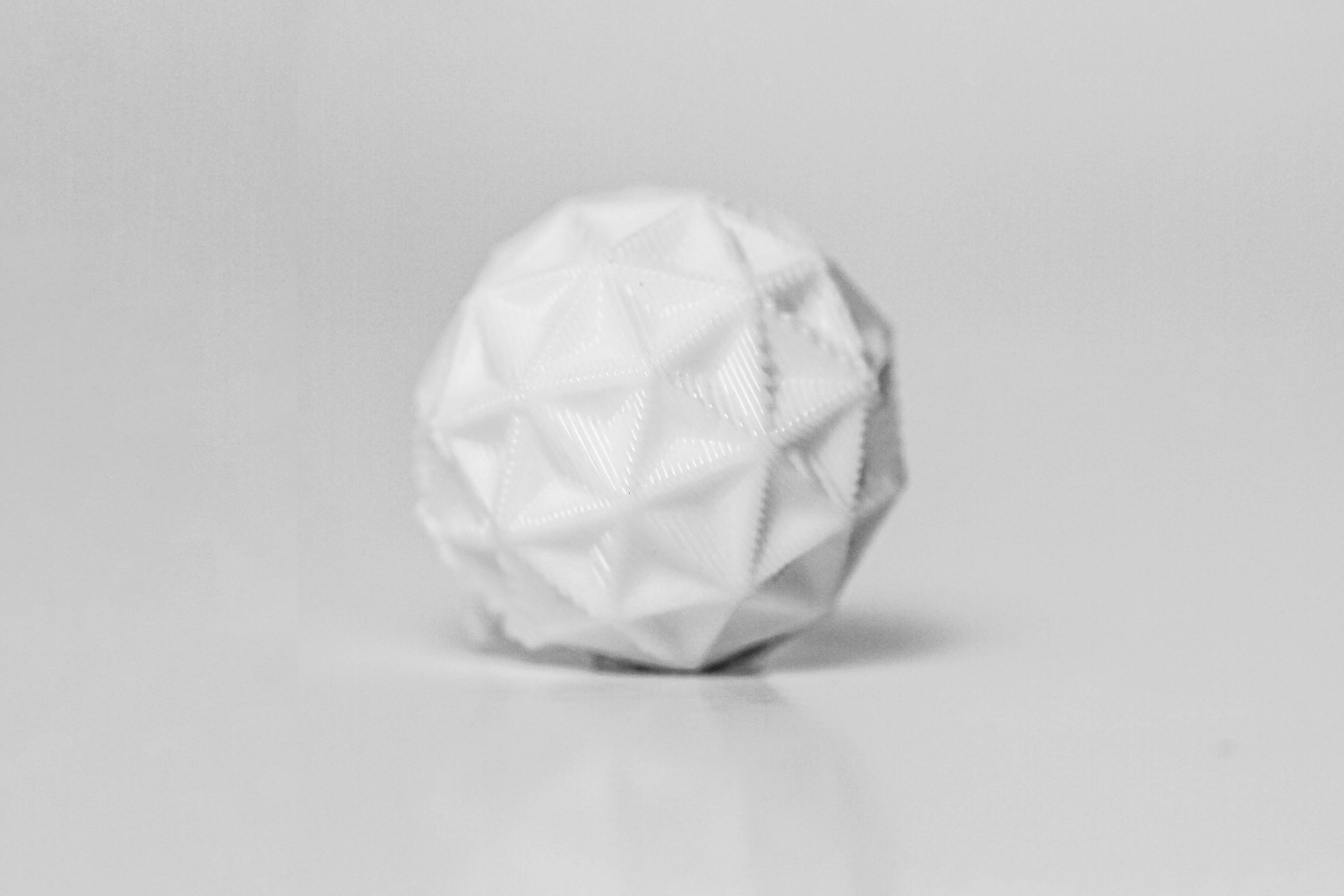

PROTOTYPE PHOTOSHOOT

Following last week's consultation, I wanted to improve my prototype shots so that the physical prototypes were documented with better photographic composition. I borrowed the micro-lens camera from Andreas to get close-up shots of the 3D objects' textures, since texture and shape complexity made up a significant portion of my 3D data objects prototype research.

-

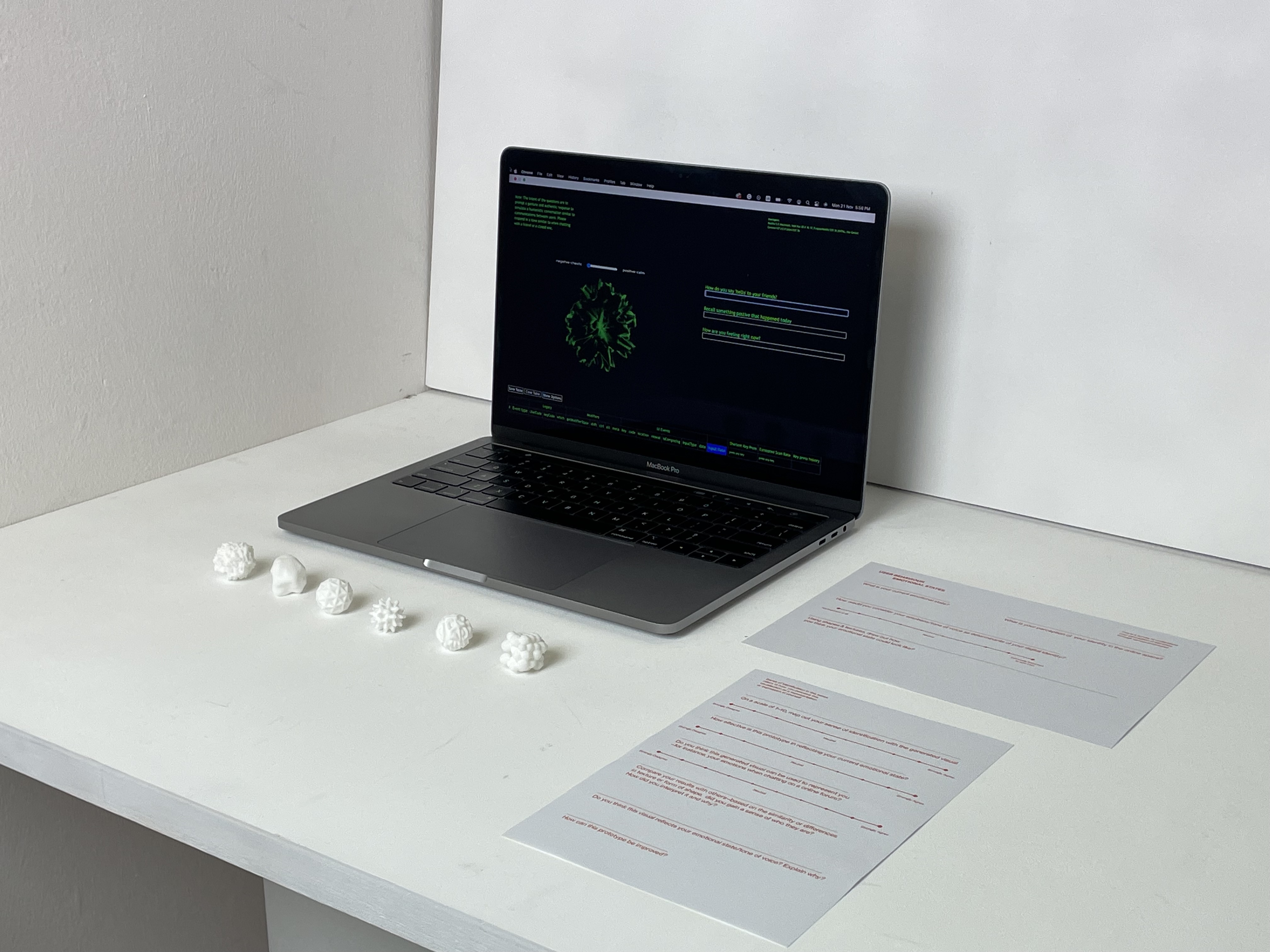

Testing the setup, lighting and camera settings using Aimee's coffee can as the subject.

We did have some struggles learning the camera and getting the lighting right, but after a few hours we finally got the hang of it!

Above is the setup we created at the back of D301. Surprisingly, there were many discarded materials, such as foam boards, A1-sized paper and random planks, that we could use for our backdrop. For the makeshift table, we pulled over the circular pedestal as a stand for our table surface, and we used the existing standing whiteboards as the walls of our photography space.

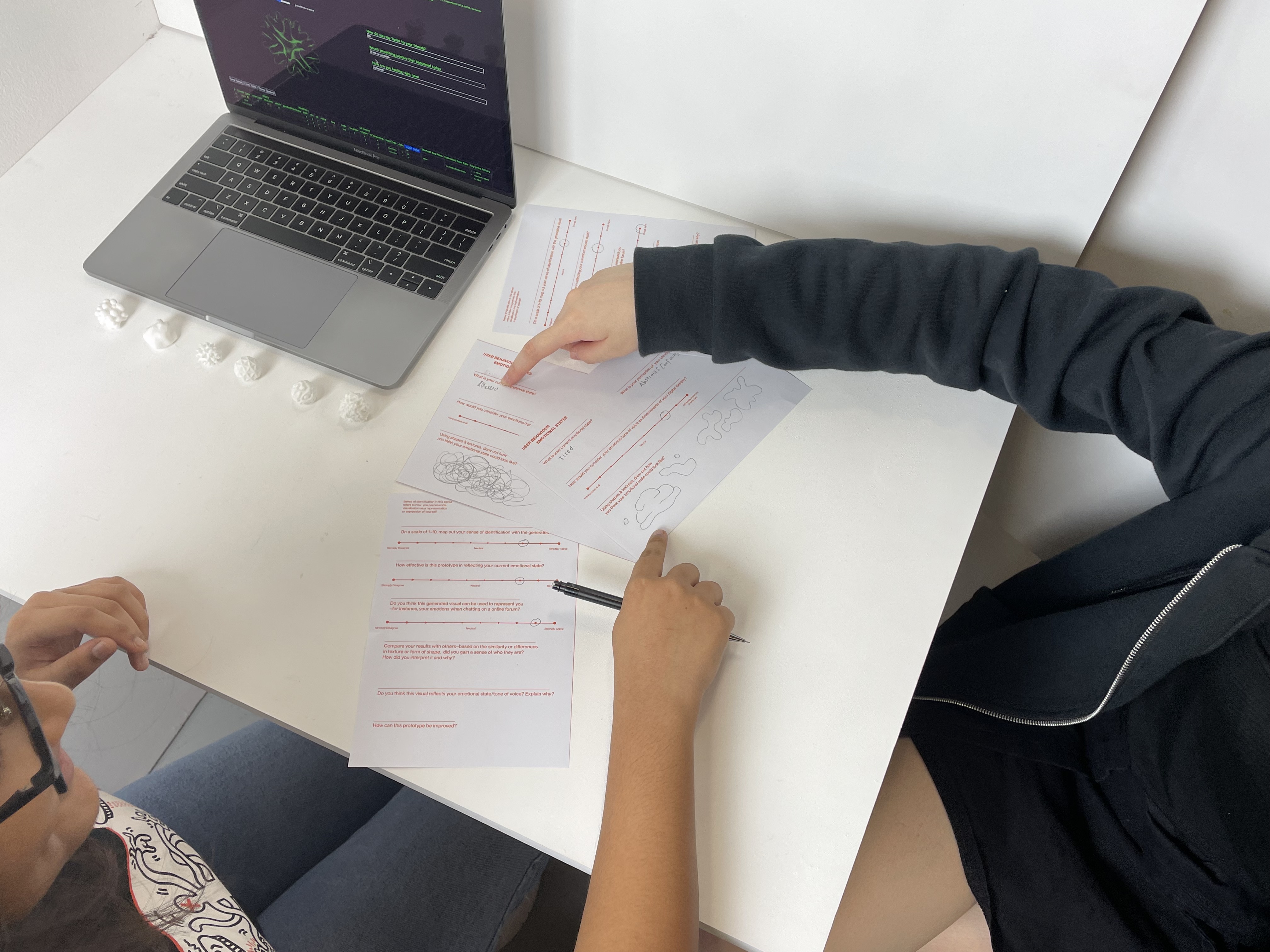

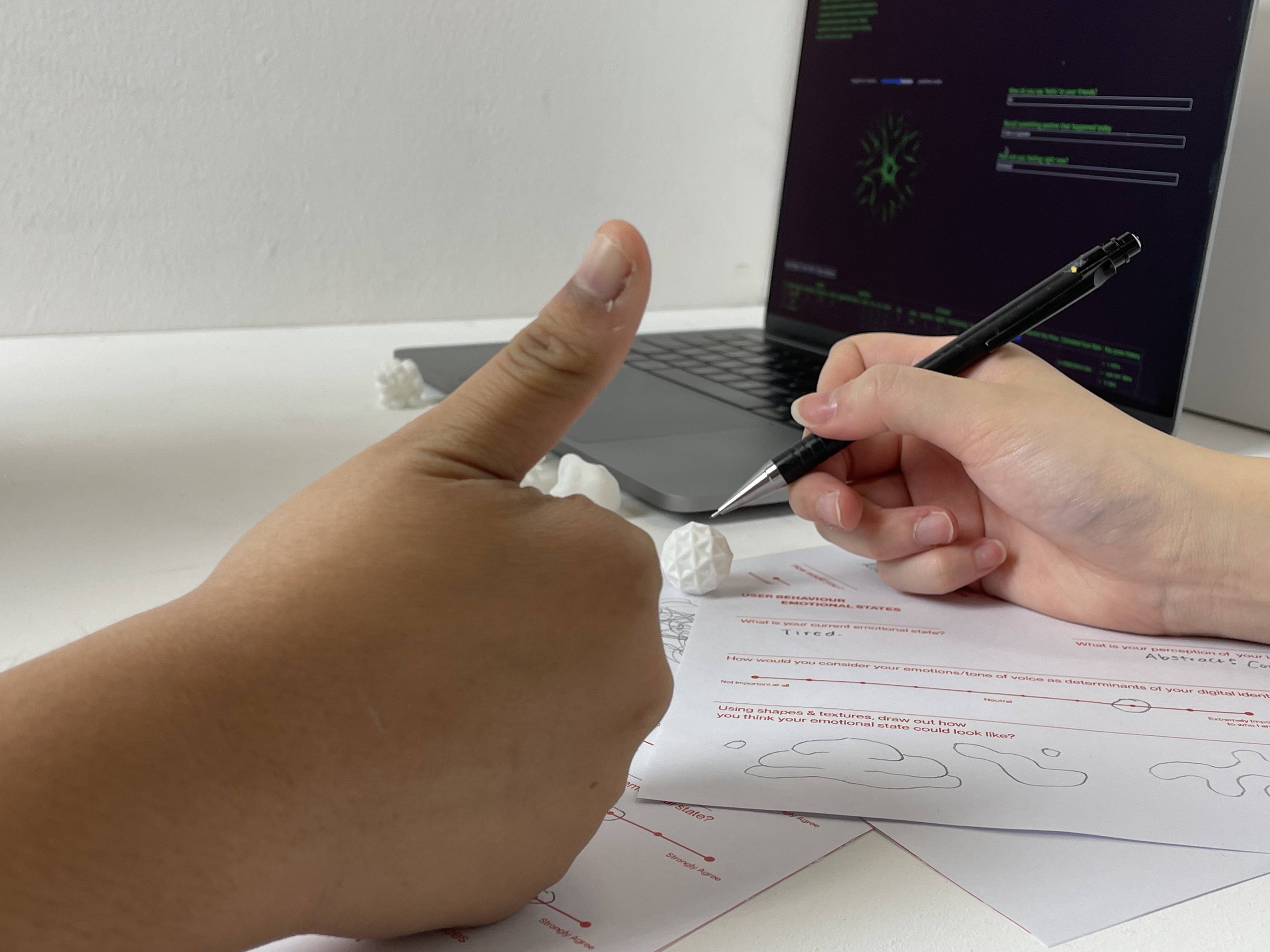

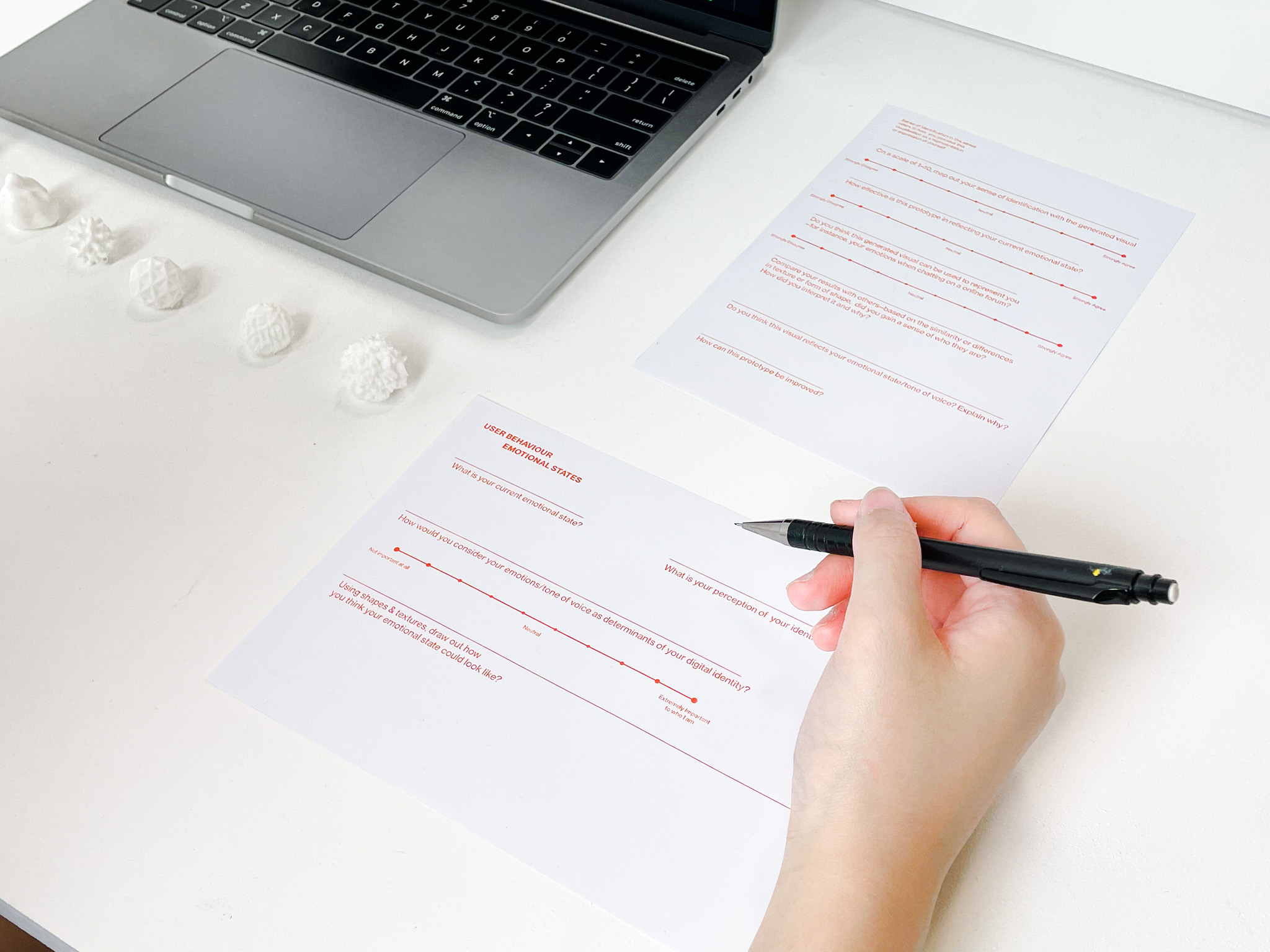

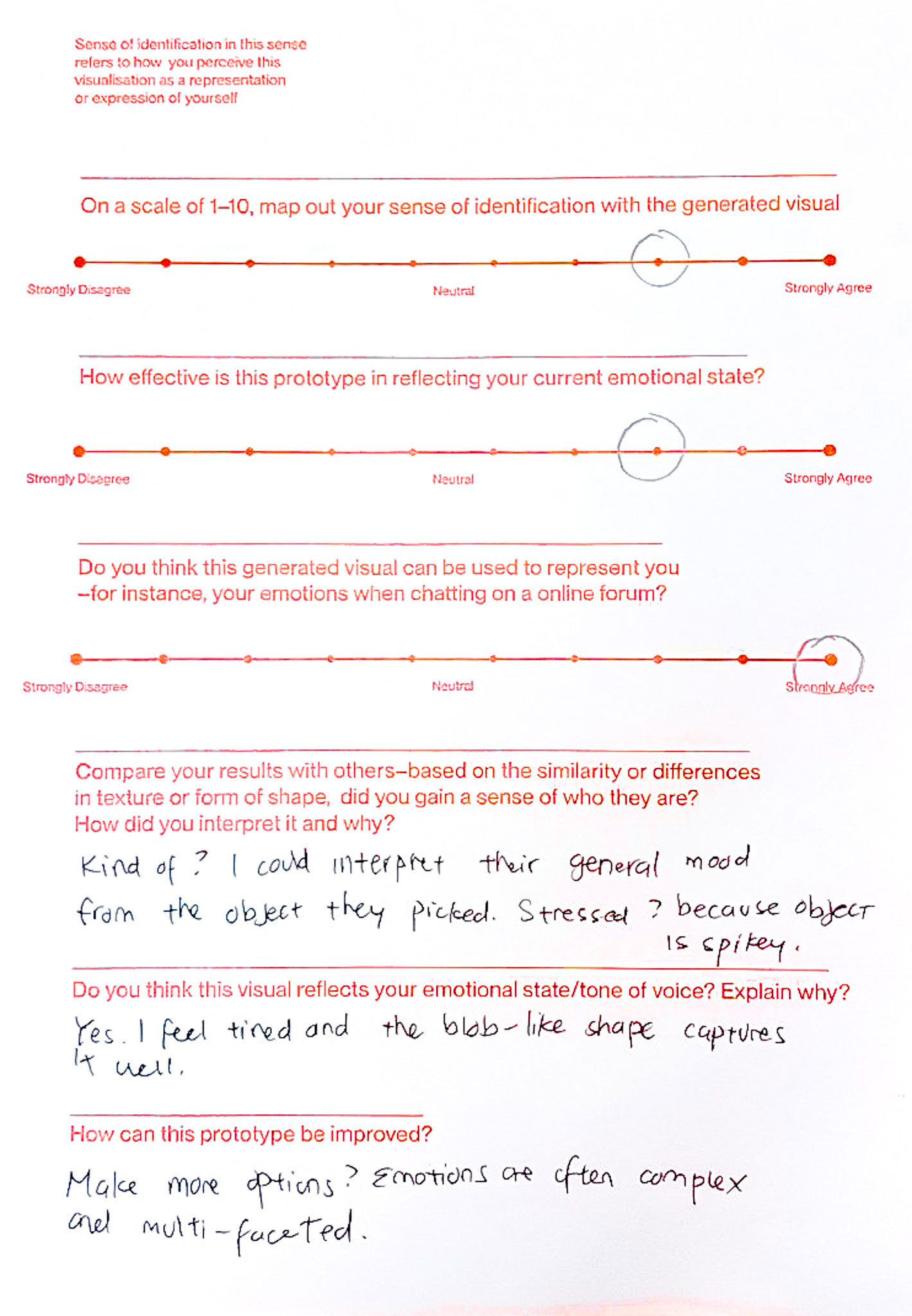

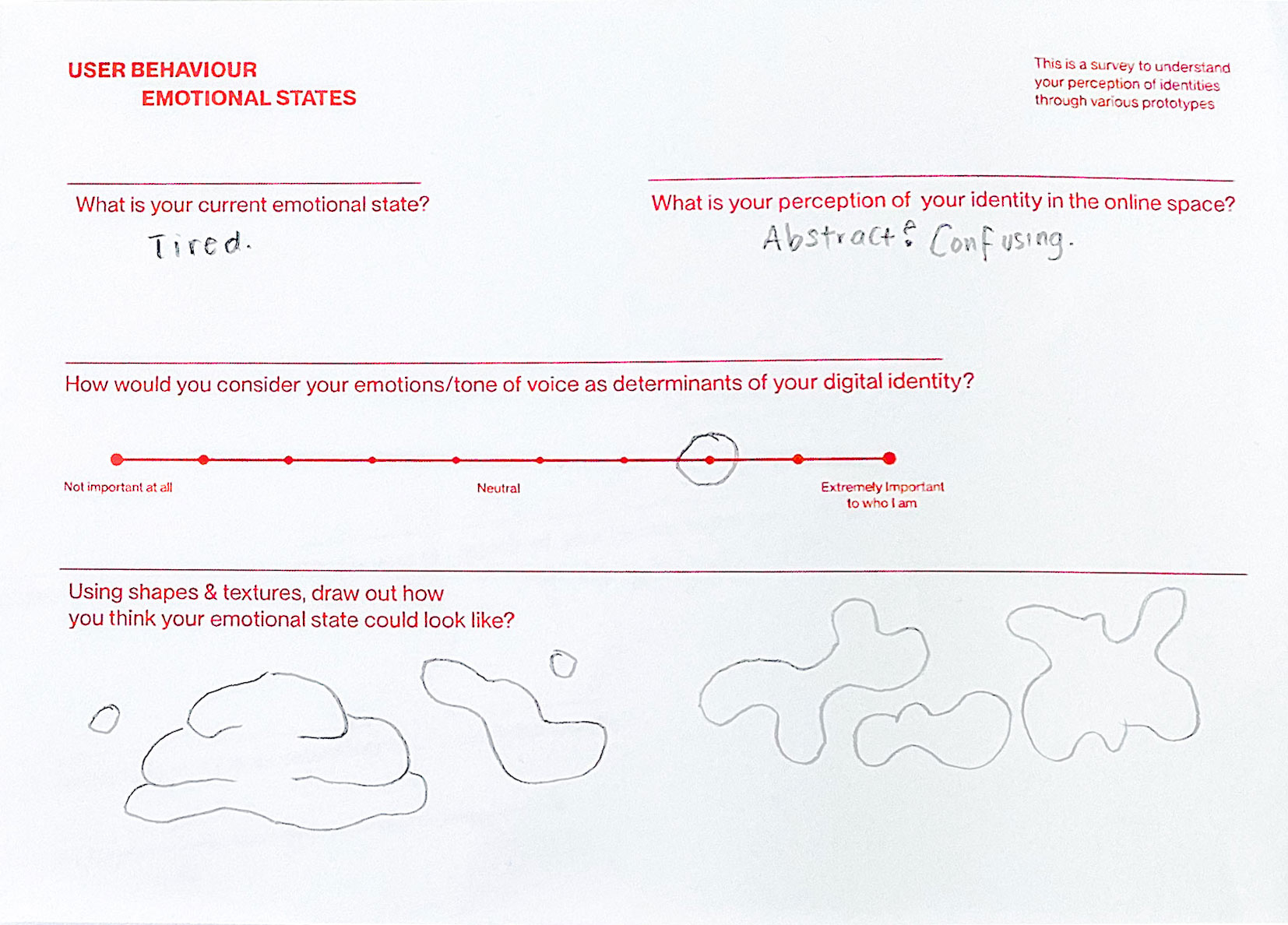

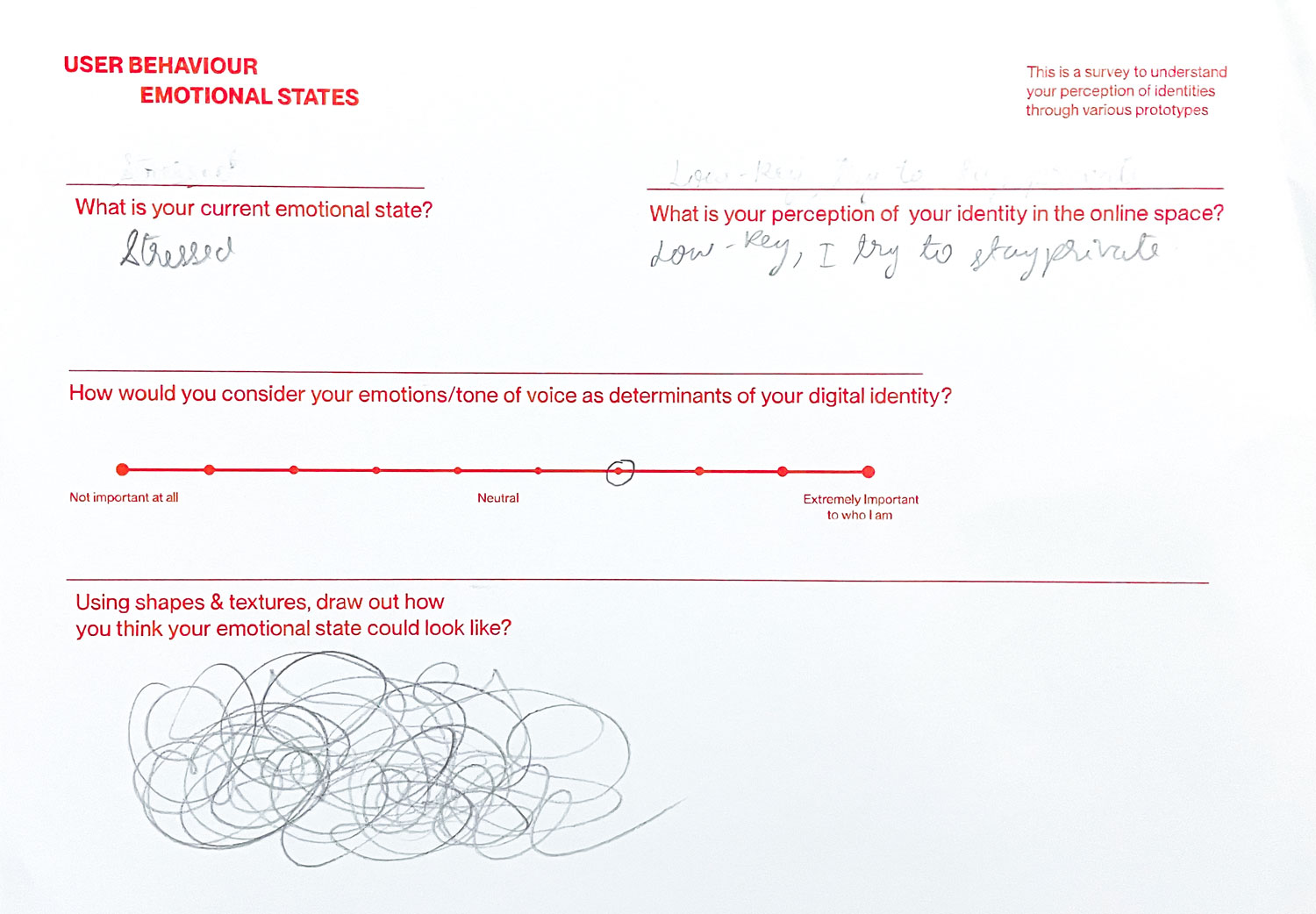

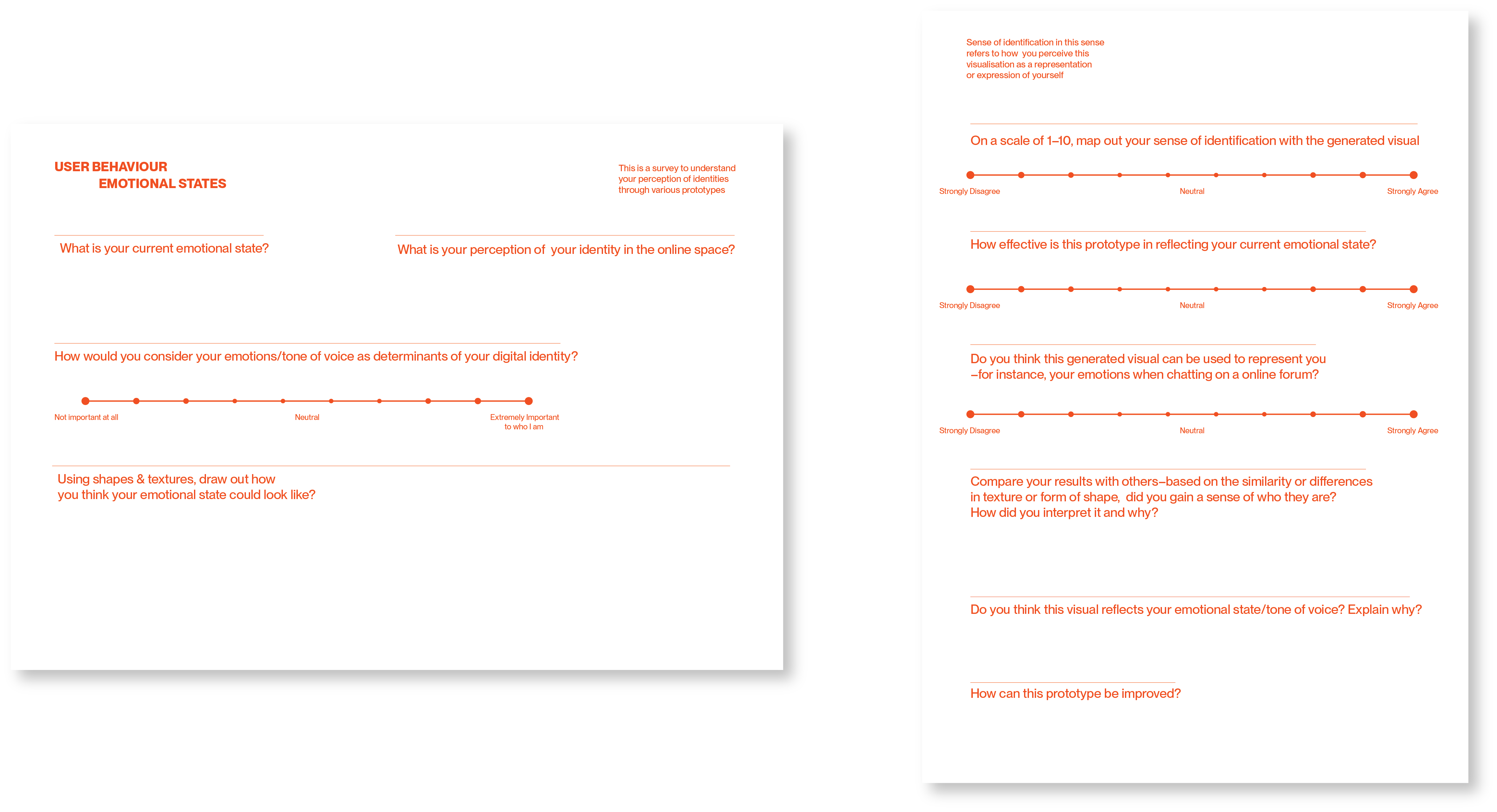

Based on the Social Identity and Personal Identity Scale (SIPI) from my user research, I formulated a set of questions to help evaluate the prototypes. The survey on the left is completed before testing, to gauge participants' current perception of emotions, tone of voice and identities. In the last row, participants are prompted to sketch what they think their emotional state would look like through shapes and textures, which settles them into the mindset of using shapes to visualise emotions.

The SIPI scale on the right is administered after the user-testing to evaluate the prototype. Its questions also surface insights into how participants' perception of identity through emotional states has changed. Additionally, one section prompts participants to compare their data objects with each other's. This section attempts to simulate social identity and collectivism: conversations between participants arise from the commonalities in their data objects, building a sense of connection and identification. It also helps gauge whether the data objects can indeed represent a person's emotional state and serve as the user's perceived identity.

I also conducted user-testing with Aimee, Ananya and Sadhna, who tried out both parts of the 3D affective data objects prototype: the digital interface and the physical artefacts.

-

RESULTS

Results from the user-testing suggest that participants identified strongly with their data objects! Additionally, I noticed some resemblance in emotional valence and visual behaviour between what participants drew as their emotional state before the test and the object generated for them afterwards.

-

FEEDBACK

One piece of feedback I received from the user-testing was to create more affective data objects covering a wider range of emotional states, to better reflect the complexity of human emotions.